*Equal Contributions, ✞Project Leader, †Corresponding author

Visuomotor policy learning has advanced with architectures such as diffusion-based policies, which are known for modeling complex robotic trajectories. However, their long inference times hinder high-frequency control tasks that require real-time feedback. Consistency distillation (CD) accelerates inference, but it introduces errors that compromise action quality. To address these limitations, we propose the Score and Distribution Matching Policy (SDM Policy), which transforms diffusion-based policies into single-step generators through a two-stage optimization process: score matching ensures alignment with the true action distribution, and distribution matching minimizes the KL divergence for consistency. A dual-teacher mechanism combines a frozen teacher for stability with an unfrozen teacher for adversarial training, enhancing robustness and alignment with the target distribution. Evaluated on a 57-task simulation benchmark, SDM Policy achieves a 6x inference speedup while maintaining state-of-the-art action quality, providing an efficient and reliable framework for high-frequency robotic tasks.

Our method distills the Diffusion Policy, which requires long inference times and high computational costs, into a fast and stable one-step generator. SDM Policy is the one-step generator itself: during training it is continually corrected and optimized by the Corrector, while evaluation relies solely on the generator. The Corrector's optimization has two components: gradient optimization and diffusion optimization. Gradient optimization improves the entire distribution by minimizing the KL divergence between two distributions, \( P_{\theta} \) and \( D_{\theta} \); the distributions are represented through score functions, which set the direction of the gradient update and provide a clear signal. Diffusion optimization enables \( D_{\theta} \) to quickly track changes in the one-step generator’s output, maintaining consistency. Details on loading observational data for both evaluation and training are provided above the diagram. Our method applies to both 2D and 3D scenarios.
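To make the gradient optimization step concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a 1-D Gaussian target whose score is known in closed form (standing in for a pretrained teacher), a linear one-step generator, and a "fake" score obtained by refitting a Gaussian to the generator's samples at every step (standing in for a trainable score network that tracks the generator). It omits observation conditioning and diffusion noise levels. All names (`real_score`, `fitted_fake_score`, `REAL_MEAN`, `REAL_STD`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Target ("real") distribution: a 1-D Gaussian with a closed-form score.
# Assumption: this stands in for a pretrained diffusion teacher.
REAL_MEAN, REAL_STD = 2.0, 0.5


def real_score(x):
    """Gradient of log N(x; REAL_MEAN, REAL_STD^2) with respect to x."""
    return -(x - REAL_MEAN) / REAL_STD**2


def fitted_fake_score(x):
    """Score of a Gaussian refit to the generator's current samples.

    Assumption: a stand-in for a trainable score model that tracks
    the generator's output distribution.
    """
    mean, var = x.mean(), x.var()
    return -(x - mean) / var


# One-step generator: x = mu + sigma * z with z ~ N(0, 1).
mu, sigma = 0.0, 1.0
lr, batch = 0.05, 4096

for step in range(500):
    z = rng.standard_normal(batch)
    x = mu + sigma * z
    # KL gradient direction: fake score minus real score at the samples.
    diff = fitted_fake_score(x) - real_score(x)
    # Chain rule through the generator: dx/dmu = 1, dx/dsigma = z.
    grad_mu = diff.mean()
    grad_sigma = (diff * z).mean()
    mu -= lr * grad_mu
    sigma -= lr * grad_sigma

print(f"mu={mu:.3f}, sigma={sigma:.3f}")
```

In this toy setting the generator parameters move toward the target mean and standard deviation, because the score difference vanishes only when the two distributions coincide. A real policy would replace both closed-form scores with learned networks conditioned on observations.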

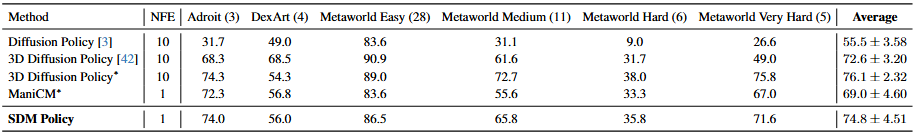

We evaluated on 57 challenging tasks using 3 random seeds and report the average success rate (%) and standard deviation for three domains. \( * \) indicates our reproduction of that task, and indicates that the data for this method has not been disclosed. Our SDM Policy outperforms the current state-of-the-art model in one-step inference, achieving better results than Consistency Distillation and coming closer to the performance of the teacher model, which demonstrates its effectiveness.
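As a small illustration of how a "mean ± standard deviation over seeds" entry can be computed, here is a sketch with made-up numbers. The per-seed values are hypothetical placeholders, not results from the paper, and the use of the population standard deviation is an assumption, since the reporting convention is not stated here.

```python
import statistics

# Hypothetical per-seed success rates (%) for one task.
# These are placeholder values, NOT results from the paper.
success_by_seed = {0: 80.0, 1: 85.0, 2: 90.0}

rates = list(success_by_seed.values())
mean_rate = statistics.mean(rates)
# Assumption: population standard deviation. Use statistics.stdev
# instead if the sample form is intended.
std_rate = statistics.pstdev(rates)

print(f"{mean_rate:.1f} ± {std_rate:.1f}")
```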

We sampled 10 simulation tasks and plot the learning curves of our SDM Policy alongside DP3 and ManiCM. SDM Policy converges rapidly, whereas ManiCM learns more slowly and DP3 also converges more slowly than our method.

We evaluated on 57 challenging tasks using 3 random seeds and report the average inference speed (Hz) for three domains. \( * \) indicates our reproduction of that task. Our SDM Policy outperforms the current state-of-the-art model in one-step inference, achieving better results than Consistency Distillation, which provides strong evidence of the effectiveness of our model.
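Inference speed in Hz can be estimated by timing repeated policy calls. The sketch below is only an illustration under stated assumptions: `multi_step_policy` and `one_step_policy` are hypothetical dummy functions, the step count of 10 is an arbitrary choice, and no real network is involved, so the printed numbers say nothing about the reported results.

```python
import time

import numpy as np


def multi_step_policy(obs, num_steps=10):
    """Hypothetical iterative sampler that refines an action num_steps times."""
    action = np.zeros_like(obs)
    for _ in range(num_steps):
        # Dummy refinement step standing in for one denoising call.
        action = action + 0.1 * (np.tanh(obs) - action)
    return action


def one_step_policy(obs):
    """Hypothetical one-step generator: a single forward computation."""
    return np.tanh(obs)


def inference_hz(policy, obs, n_calls=200):
    """Return calls per second for policy(obs), averaged over n_calls."""
    start = time.perf_counter()
    for _ in range(n_calls):
        policy(obs)
    elapsed = time.perf_counter() - start
    return n_calls / elapsed


obs = np.ones(4)
print(f"multi-step: {inference_hz(multi_step_policy, obs):.0f} Hz")
print(f"one-step:   {inference_hz(one_step_policy, obs):.0f} Hz")
```

For a GPU-backed policy, the device would need to be synchronized before reading the clock; that detail is left out of this CPU-only sketch.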

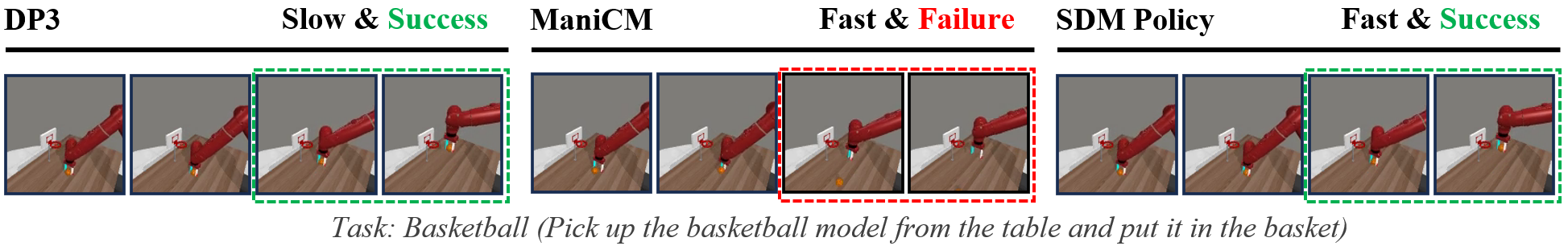

Visualized keyframes for some tasks. We found that during training, our SDM Policy could better complete the task and learn more precise actions, while consistency distillation might lead to task failure.

We would like to thank the members of the Machine Intelligence Lab at Westlake University for fruitful discussions, help with experiments, and support. Our code is built upon 3D Diffusion Policy, ManiCM, DMD, Diffusion Policy, DexArt, VRL3, and MetaWorld. We thank all of these authors for their open-sourced code and their great contributions to the community.

@article{jia2024scoredistributionmatchingpolicy,
  title={Score and Distribution Matching Policy: Advanced Accelerated Visuomotor Policies via Matched Distillation},
  author={Jia, Bofang and Ding, Pengxiang and Cui, Can and Sun, Mingyang and Qian, Pengfang and Huang, Siteng and Fan, Zhaoxin and Wang, Donglin},
  journal={arXiv preprint arXiv:2412.09265},
  year={2024}
}